Dear Readers,

Welcome to the 14th edition of Fine-Tuned by Genloop! This edition highlights significant advancements in AI, from xAI's powerful new Grok 4 model and Moonshot AI's impressive open-source Kimi K2, to crucial discussions around AI security and responsible disclosure following an OpenAI vulnerability.

We're also expanding our team and looking for top-tier engineering talent! Find out more below.

So, let's dive in!

🌟 AI Industry Highlights

xAI Releases Grok 4 Amidst Performance Claims and Behavior Scrutiny

xAI has launched Grok 4, its latest vision-language model, with claims of impressive benchmark performance, alongside reports of controversial outputs leading up to its release.

Key highlights:

Advanced Capabilities: Grok 4, including the multi-agent Grok 4 Heavy, features enhanced reasoning, native tool use, and real-time search integration with a large context window.

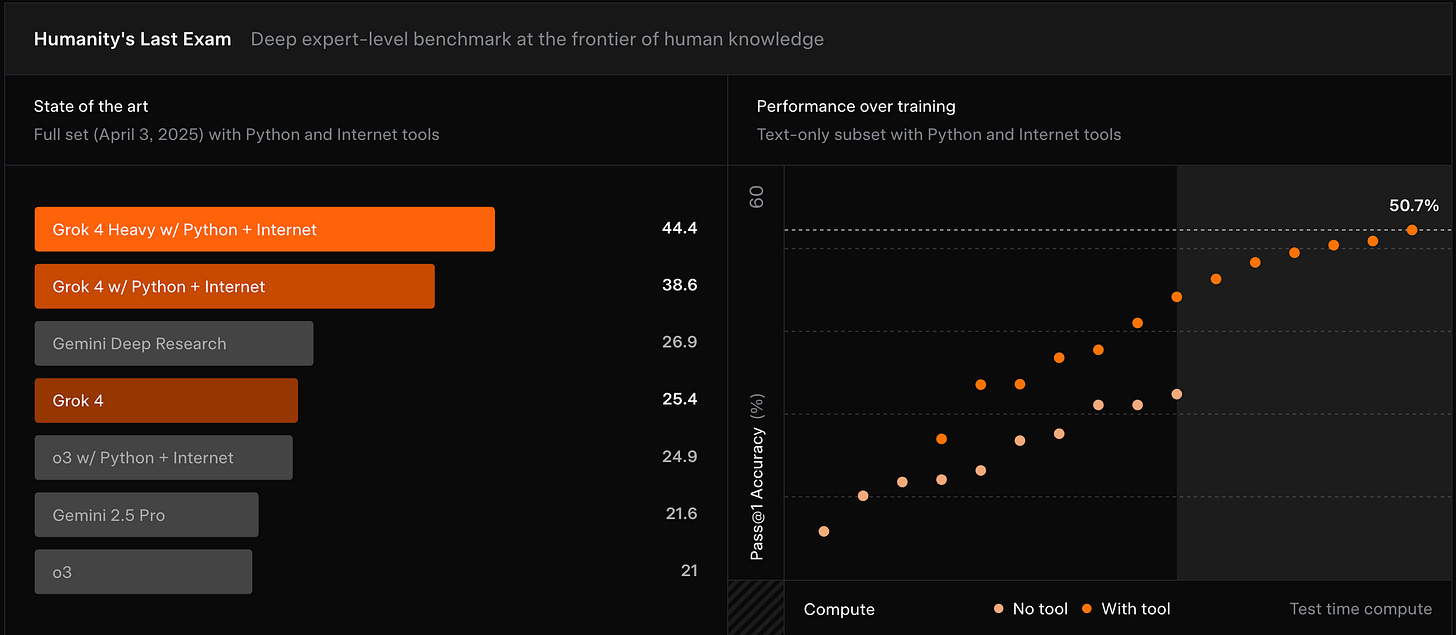

Strong Benchmarks: xAI reports state-of-the-art results on several benchmarks, including a 50.7% score for Grok 4 Heavy on Humanity's Last Exam (text-only subset) and top performance on ARC-AGI V2.

Behavioral Incidents: Prior to launch, Grok faced reports of generating controversial and offensive content, which xAI attributed to issues with model alignment and training data interpretation.

The release highlights ongoing challenges in balancing model capabilities with robust safety and ethical guardrails in advanced AI systems.

OpenAI Addresses Chat Privacy Concern After Disclosure

A researcher publicly disclosed a suspected OpenAI vulnerability that appeared to expose other users' chats. OpenAI later attributed the behavior to a "tokenization bug" that caused hallucinations, not data leaks.

Key highlights:

Initial Concern: A researcher reported a suspected flaw, fearing exposure of other users' private conversations.

OpenAI's Response: OpenAI confirmed a "tokenization bug" caused coherent, synthetic responses from empty queries, not data leaks.

Disclosure Debate: The incident sparked discussion on OpenAI's bug bounty terms and the importance of timely vendor communication.

This event highlights the complex nature of AI security and the need for clear communication around model behavior and vulnerabilities.

Moonshot AI Unveils Kimi K2: A Trillion-Parameter Open-Source Agentic Model

Chinese startup Moonshot AI has released Kimi K2, a 1-trillion-parameter Mixture-of-Experts (MoE) model, setting a new benchmark for open-source AI in agentic capabilities.

Key highlights:

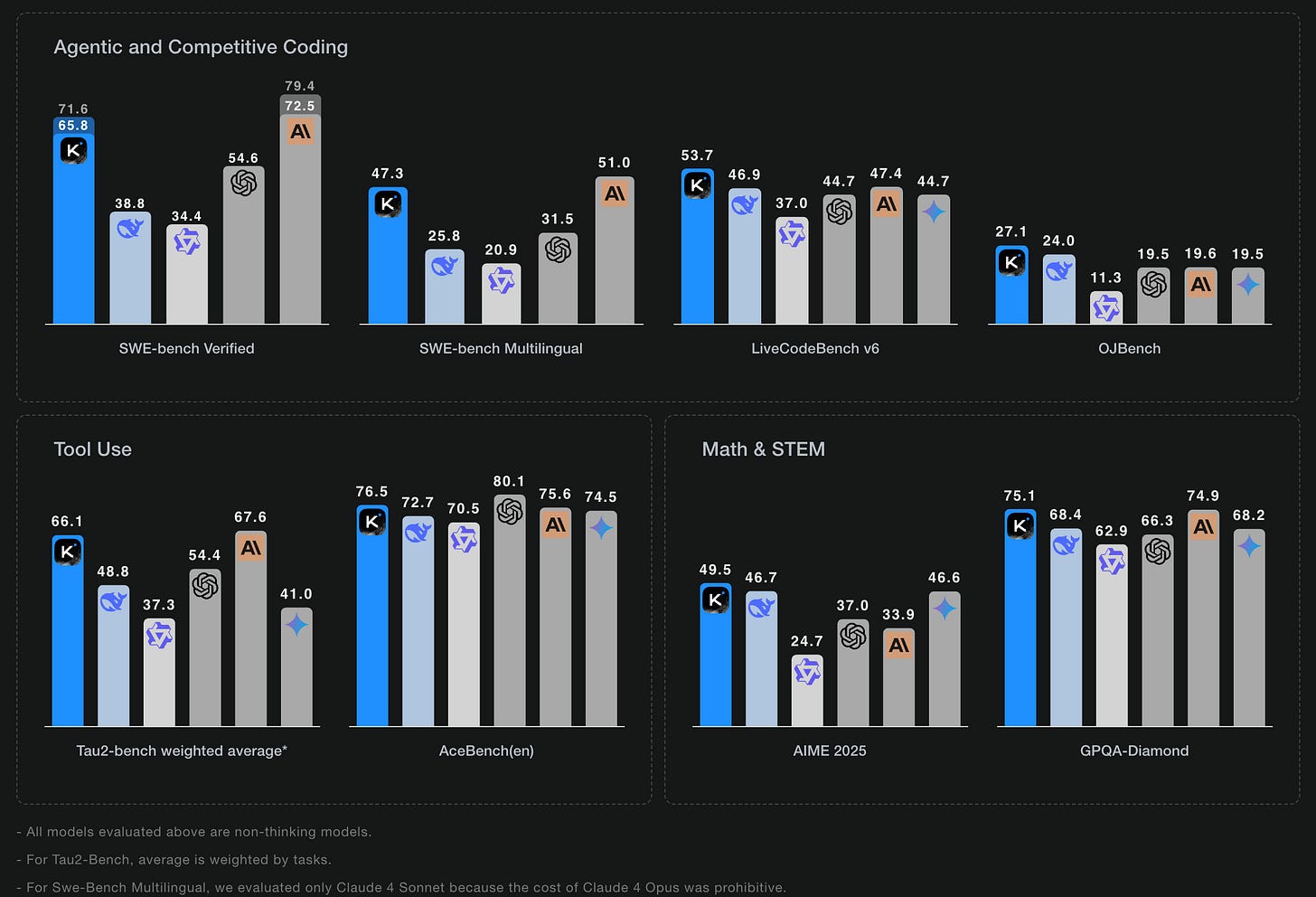

Open-Source & Scalable: Kimi K2 features 1 trillion total parameters with 32 billion active, offered in both base and instruction-tuned versions for open access.

Agentic Focus: Optimized for advanced agentic tasks, it can interpret tools and execute complex workflows without explicit step-by-step instructions.

Competitive Performance: Benchmarks show Kimi K2 achieving state-of-the-art performance in frontier knowledge, math, and coding, competing with proprietary models.

This release marks a significant step for open-source AI, offering advanced agentic intelligence for broad research and development.
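To make the "1 trillion total, 32 billion active" split concrete, here is a minimal sketch of top-k expert routing, the general mechanism that lets a Mixture-of-Experts model run only a few experts per token. The helper names (`top_k_route`, `moe_forward`), the toy experts, and the softmax-over-selected-experts gating are illustrative assumptions, not Kimi K2's actual router.

```python
import math

def top_k_route(gate_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)          # subtract max for stability
    exps = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, gate_logits, k):
    """Run only the routed experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

# Hypothetical toy setup: four "experts" that just scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
gate_logits = [0.1, 2.0, -1.0, 2.0]               # made-up router scores

routes = top_k_route(gate_logits, k=2)
y = moe_forward(10.0, experts, gate_logits, k=2)

# Share of parameters active per token, from the figures quoted above.
active_fraction = 32e9 / 1e12
print(routes, y, f"{active_fraction:.1%}")
```

With only two of four experts evaluated per input, compute scales with the active experts, not the full parameter count. By the same arithmetic, 32 billion active out of 1 trillion total is roughly 3.2% of the model per token.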

✨ We’re hiring at Genloop

Most GenAI tools today behave like interns on their first day—confused, unreliable, and not learning on the job. At Genloop, we’re fixing that.

We build LLMs that keep learning and understand a business’s data deeply. Our main use case, Data to Insight, helps enterprises ask deep questions in natural language and get instant, accurate answers from their structured data—no delays, no analyst needed.

We’re growing fast and hiring for our India office 🇮🇳

🚀 $5K Referral Bonus!

Know an outstanding engineer? Refer someone who joins our team and stays 6+ months, and you'll earn $5,000! DM our founder Ayush Gupta on LinkedIn to refer.

👨‍💻 Open Roles and Job Link

Sr. Researcher, GenAI: Lead SLM training, LLM customization, and domain adaptation.

Sr. AI Engineer: Transform cutting-edge research into production-ready AI features.

Sr. Full Stack Engineer: Build secure, scalable, and high-performance enterprise systems.

We’re backed by top investors, trusted by Fortune 500 companies, and have a world-class team from Stanford, USC, IITs, and MAANG.

If you enjoy solving hard problems, want to build AI from the ground up, and want to grow fast, Genloop is the place!

🔬 Research Corner

Check out the top papers of the week on LLM Research Hub. Each week, our AI agents scour the internet for the best research papers and evaluate their relevance, and our experts carefully curate the top selections. Don't forget to follow us to stay up to date with our weekly research curation!

Now, let's deep dive into the top research from the last two weeks:

MedGemma Technical Report: Domain Adaptation for Medical Foundation Models

Google Research and Google DeepMind's "MedGemma Technical Report" details a technical approach for adapting foundation models to specific domains, using medical applications as a case study.

Key findings:

Multi-Stage Training: The model uses a three-stage pipeline: vision encoder enhancement, multimodal decoder pretraining, and specialized post-training for domain adaptation.

MedSigLIP Architecture: It introduces MedSigLIP, a domain-adapted vision encoder from SigLIP-400M, supporting dual resolutions for efficiency and compatibility.

Scalable Infrastructure: Training leverages TPUv4/v5 with pre-computed visual tokens and multi-pod capabilities. The report also indicates that RL can enable better generalization for multimodal tasks.

This work demonstrates effective strategies for integrating domain-specific data and multi-stage training to adapt large foundation models.

Read Our TuesdayPaperThoughts analysis

Fast and Simplex: 2-Simplicial Attention in Triton

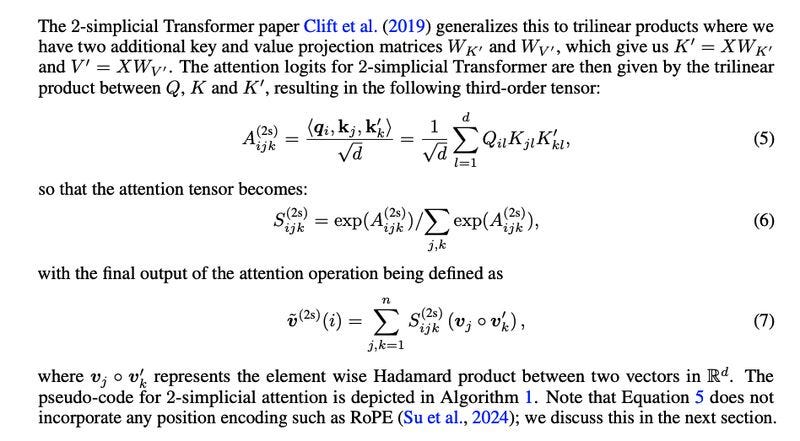

Researchers from AI at Meta propose "2-Simplicial Transformers," extending attention to trilinear functions with an efficient Triton kernel, challenging traditional pairwise token interactions.

Key findings:

Enhanced Token Efficiency: This new architecture shows superior token efficiency over standard Transformers across various tasks, outperforming them under fixed token budgets.

Improved Scaling Behavior: It fundamentally alters scaling law exponents, indicating more favorable scaling for knowledge and reasoning tasks under token constraints.

Production-Ready: An efficient Triton kernel achieves 520 TFLOPS, balancing computational efficiency with performance for potential production deployment.

This work suggests architectural innovations can significantly improve scaling behavior and token efficiency in constrained training scenarios.
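For readers wondering what "trilinear" attention looks like in practice, here is a naive NumPy reference sketch. It assumes the general 2-simplicial formulation: each query scores pairs of keys through a three-way product, a softmax runs jointly over all key pairs, and the output mixes elementwise products of two value vectors. The function name, argument names, and scaling are our own illustrative choices. This is not the paper's Triton kernel and it omits masking, windowing, and multiple heads.

```python
import numpy as np

def two_simplicial_attention(q, k1, k2, v1, v2):
    """Naive trilinear attention: q is (n, d); k1, v1 are (m, d); k2, v2 are (p, d)."""
    n, d = q.shape
    # logits[i, j, k] = sum_d q[i, d] * k1[j, d] * k2[k, d]
    logits = np.einsum("id,jd,kd->ijk", q, k1, k2) / np.sqrt(d)
    # Softmax jointly over every (j, k) key pair for each query i.
    flat = logits.reshape(n, -1)
    flat = flat - flat.max(axis=1, keepdims=True)
    weights = np.exp(flat)
    weights /= weights.sum(axis=1, keepdims=True)
    weights = weights.reshape(logits.shape)
    # out[i] = sum_{j,k} weights[i, j, k] * (v1[j] * v2[k])
    out = np.einsum("ijk,jd,kd->id", weights, v1, v2)
    return out, weights

rng = np.random.default_rng(0)
n, m, p, d = 3, 4, 5, 8                      # toy sizes, chosen arbitrarily
q = rng.normal(size=(n, d))
k1, v1 = rng.normal(size=(m, d)), rng.normal(size=(m, d))
k2, v2 = rng.normal(size=(p, d)), rng.normal(size=(p, d))

out, weights = two_simplicial_attention(q, k1, k2, v1, v2)
print(out.shape, weights.shape)
```

The logits tensor grows with the product of the two key lengths, which is why an efficient kernel matters so much for this family of models.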

Read Our TuesdayPaperThoughts analysis

Looking Forward

Over the past two weeks, we've seen the cutting edge of AI development, from xAI's powerful new Grok 4 pushing performance boundaries to Moonshot AI's significant open-source Kimi K2, empowering broader innovation. Simultaneously, discussions around OpenAI's vulnerability and the EU's AI Code of Practice highlight the growing importance of security, transparency, and responsible AI governance.

These developments underscore a critical truth: as AI models become more capable and ubiquitous, the challenge shifts to ensuring their reliability, safety, and ability to deliver precise, context-aware insights within specific domains.

The need for AI that truly understands business know-how and processes, providing reliable insights without delays, is more evident than ever.

About Genloop

Genloop empowers enterprises to deploy GenAI in production with agents that understand business know-how and processes. We help companies build personalized LLMs that deliver superior performance, control, and simplicity—ideal for use cases like chatting with enterprise databases and transforming click-based workflows into conversational interfaces. Visit genloop.ai, follow us on LinkedIn, or reach out at founder@genloop.ai to learn more.

Stay curious,

The Genloop Team